Autonomous driving and safety

Vikas Dhiman

Assistant Professor at the University of Maine


Robotics and Learning

Self-driving cars

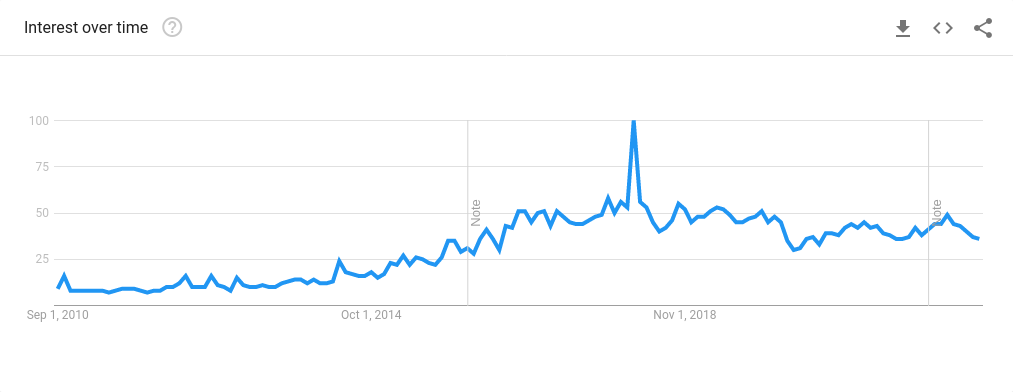

Google trends for 'Self-driving cars'

Lessons from aerospace

Cars: 150 deaths per 10 billion miles

Commercial aviation: 0.2 deaths per 10 billion miles

Artificial Intelligence


Why?

Bias-Variance trade-off

Bayesian Learning

How to handle uncertainty safely?

My research area

My Background

Safety

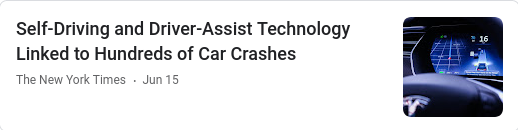

Safe control while learning

Given:

- Map and localization

- Desired trajectory as a plan

- Unsafe regions

Problem 1:

- Learn uncertainty-aware robot system dynamics

Problem 2:

- Follow the desired trajectory while avoiding unsafe actions

How to learn dynamics?

- Maximum likelihood models

  - Koopman operators (Mamakoukas et al. (2020))

  - Model-based reinforcement learning (Wang et al. (2019))

- Bayesian methods

  - Ensemble neural networks (Pearce et al. (2018))

  - Dropout neural networks (Gal and Ghahramani (2016))

  - Probabilistic Backpropagation (Hernández-Lobato and Adams (2015))

  - Gaussian Processes (Rasmussen (2003))

Gaussian Processes


Problem formulation

- \begin{align} \min_{\bfu \in \mathcal{U}}& \text{ Cost function } \\ \qquad\text{s.t.}&~~\bbP\bigl( \text{ Safety constraint } \bigr) \ge 1-\epsilon, \end{align}

Problem 1

- \begin{align} \dot{\bfx} = F(\bfx) \ctrlaff \end{align}

- \[ \vect(F(\bfx)) \sim \GP(\vect(\bfM_0(.)), \bfK_0(.,.)) \]

- \[\StDat_{1:k} \triangleq [\bfx(t_1), \dots, \bfx(t_k)]\]

- \[\bfU_{1:k} \triangleq [\bfu(t_1), \dots, \bfu(t_k)]\]

- \[ \StDtDat_{1:k} \triangleq [\dot{\bfx}(t_1), \dots, \dot{\bfx}(t_k)] \]

- Compute the posterior distribution \(\GP(\vect(\bfM_k(\bfx)), \bfK_k(\bfx,\bfx'))\) of \(\vect(F(\bfx)) \mid (\StDat_{1:k}, \bfU_{1:k}, \StDtDat_{1:k})\).

Control Barrier Functions

- For differentiable \( h(\bfx) \), the safe set is \( \calC = \{ \bfx \in \calX : h(\bfx) > 0 \} \)

- Assume \( \grad_\bfx h(\bfx) \ne 0 \quad \forall \bfx \in \partial \calC \)

- Assume the system starts in a safe state \( \bfx(0) \in \calC \)

- The system stays safe iff \[ \dot{h}(\bfx) \ge - \gamma h(\bfx) \]

- Ames et al (ECC 2019): \begin{multline} \text{ System stays safe } \Leftrightarrow~~\exists~\bfu = \pi(\bfx)~~\text{s.t.}\\ \mbox{CBC}(\bfx,\bfu) := [\grad_\bfx h(\bfx)]^\top F(\bfx)\ctrlaff + \gamma h(\bfx) \ge 0 \;~ \forall \bfx \in \calX. \end{multline}
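As a concrete check of the barrier condition, here is a minimal sketch that evaluates \(\mbox{CBC}(\bfx,\bfu)\) for a known (not learned) control-affine system with the linear choice \(\gamma h(\bfx)\). The single integrator \(\dot{x} = u\), the barrier \(h(x) = 1 - x^2\), and the helper name `cbc` are illustrative assumptions, not part of the talk.

```python
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]  # list of rows


def cbc(
    x: Vector,
    u: Vector,
    f: Callable[[Vector], Vector],
    g: Callable[[Vector], Matrix],
    h: Callable[[Vector], float],
    grad_h: Callable[[Vector], Vector],
    gamma: float,
) -> float:
    """CBC(x, u) = grad_h(x)^T (f(x) + g(x) u) + gamma * h(x) for known f, g."""
    fx, gx = f(x), g(x)
    # Closed-loop velocity x_dot = f(x) + g(x) u.
    xdot = [fx[i] + sum(gx[i][j] * u[j] for j in range(len(u))) for i in range(len(x))]
    dh = grad_h(x)
    return sum(dh[i] * xdot[i] for i in range(len(x))) + gamma * h(x)


# Hypothetical example: single integrator x_dot = u, safe set |x| < 1.
f = lambda x: [0.0]
g = lambda x: [[1.0]]
h = lambda x: 1.0 - x[0] ** 2
grad_h = lambda x: [-2.0 * x[0]]

print(cbc([0.5], [1.0], f, g, h, grad_h, gamma=1.0))   # -0.25: moving outward violates the CBC
print(cbc([0.5], [-1.0], f, g, h, grad_h, gamma=1.0))  # 1.75: moving inward satisfies it
```

With a learned \(F(\bfx)\), the same expression becomes a random variable, which is what the rest of the talk handles.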

Problem 2

- \begin{align} \dot{\bfx} = F(\bfx) \ctrlaff \end{align}

- \[ \vect(F(\bfx)) \sim \GP(\vect(\bfM_k(.)), \bfK_k(.,.)) \]

- Find \(\bfu_k\) and \(\tau_k\) such that, for \(\bfu(t) = \bfu_k\), \[ \mathbb{P}(\mbox{CBC}(\bfx(t),\bfu_k) \ge 0) \ge p_k \] for all \( t \in [t_k,t_k+\tau_k) \)

Approach

- Estimate posterior distribution over \(F(\bfx)\)

- Propagate uncertainty to the Safety condition.

- Extension to continuous time using Lipschitz continuity assumptions.

- Extension to higher relative degree systems.

\[ \vect(F(\bfx)) \sim \GP(\vect(\bfM_0(.)), \bfK_0(.,.)) \]

Decoupled GPs: Learn each element of \(F(\bfx)\) independently:

\[ \bfK_0(\bfx, \bfx') = \diag([\kappa(\bfx, \bfx'), \dots ]) \]

No correlation across output dimensions, although outputs at different inputs remain correlated through \(\kappa\).
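For intuition on what each decoupled GP computes, here is a minimal sketch of standard zero-mean GP regression for one scalar element of \(F(\bfx)\), assuming a scalar input, a squared-exponential \(\kappa\) with unit hyperparameters, and noise variance \(\sigma^2 = 10^{-2}\). The kernel choice, the data, and the helper names (`rbf`, `solve`, `gp_posterior`) are hypothetical; the talk's own model is the matrix-variate GP.

```python
import math
from typing import List, Tuple


def rbf(a: float, b: float, lengthscale: float = 1.0, variance: float = 1.0) -> float:
    """Squared-exponential kernel kappa(a, b) for scalar inputs (assumed choice)."""
    return variance * math.exp(-0.5 * (a - b) ** 2 / lengthscale ** 2)


def solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix [A | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        z[r] = (M[r][n] - sum(M[r][c] * z[c] for c in range(r + 1, n))) / M[r][r]
    return z


def gp_posterior(xs: List[float], ys: List[float], x_star: float,
                 noise: float = 1e-2) -> Tuple[float, float]:
    """Posterior mean and variance of a zero-mean GP at x_star."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]                # K + sigma^2 I
    k_star = [rbf(x_star, a) for a in xs]
    alpha = solve(K, ys)                           # (K + sigma^2 I)^{-1} y
    v = solve(K, k_star)                           # (K + sigma^2 I)^{-1} k_*
    mean = sum(k * a for k, a in zip(k_star, alpha))
    var = rbf(x_star, x_star) - sum(k * w for k, w in zip(k_star, v))
    return mean, var


# Hypothetical data: samples of sin at five inputs.
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [math.sin(x) for x in xs]
print(gp_posterior(xs, ys, 1.0))   # mean close to sin(1), small variance
print(gp_posterior(xs, ys, 10.0))  # far from data: mean near 0, variance near the prior 1.0
```

Running one such regression per element of \(F(\bfx)\) is exactly the decoupled model: the training inputs are shared, but nothing couples the outputs.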

Coregionalization models (Alvarez et al., FTML 2012):

\[ \bfK_0(\bfx, \bfx') = \kappa(\bfx, \bfx') \boldsymbol{\Sigma} \]

\(\boldsymbol{\Sigma} \in \R^{n(1+m) \times n(1+m)}\) has too many parameters to learn: \(n(1+m)\bigl(n(1+m)+1\bigr)/2\) for a symmetric matrix

Matrix Variate Gaussian: inspired by Sun et al (AISTATS 2017)

\[ F \sim \mathcal{MVG}(\bfM, \bfA, \bfB) \Leftrightarrow \vect(F) \sim \calN(\vect(\bfM), \bfB \otimes \bfA) \]

\[ \bfK_0(\bfx, \bfx') = \bfB_0(\bfx, \bfx') \otimes \bfA \]
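The Kronecker factorization is what keeps the covariance tractable. A minimal sketch, assuming column-stacking \(\vect\) and small hand-picked \(\bfA\) (covariance across the \(n\) rows of \(F\)) and \(\bfB\) (covariance across the \(1+m\) columns), builds \(\bfB \otimes \bfA\), checks that \(\mathrm{Cov}(F_{ij}, F_{kl}) = B_{jl} A_{ik}\), and compares parameter counts against an unstructured \(\boldsymbol{\Sigma}\). The helper names and example sizes are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def kron(B: Matrix, A: Matrix) -> Matrix:
    """Kronecker product B (p x p) ⊗ A (q x q) as a (pq x pq) list of rows."""
    p, q = len(B), len(A)
    return [[B[i // q][j // q] * A[i % q][j % q] for j in range(p * q)]
            for i in range(p * q)]


def vec_index(row: int, col: int, n_rows: int) -> int:
    """Position of F[row][col] in the column-stacked vector vec(F)."""
    return col * n_rows + row


def n_params_full(n: int, m: int) -> int:
    """Free entries of a symmetric Sigma of size n(1+m)."""
    d = n * (1 + m)
    return d * (d + 1) // 2


def n_params_factored(n: int, m: int) -> int:
    """Free entries of a symmetric A (n x n) plus a symmetric B ((1+m) x (1+m))."""
    return n * (n + 1) // 2 + (1 + m) * (2 + m) // 2


# Hypothetical n = 2 states, m = 1 input, so F(x) is 2 x 2.
A = [[2.0, 0.5], [0.5, 1.0]]   # covariance across rows of F
B = [[1.0, 0.3], [0.3, 4.0]]   # covariance across columns of F
cov = kron(B, A)
# Cov(F[1][0], F[0][1]) = B[0][1] * A[1][0]
print(cov[vec_index(1, 0, 2)][vec_index(0, 1, 2)])     # 0.15
print(n_params_full(12, 4), n_params_factored(12, 4))  # 1830 93
```

The factored count ignores the shared scale between \(\bfA\) and \(\bfB\) (\(c\bfA\) and \(\bfB/c\) give the same product), so the true number of identifiable parameters is one fewer.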

Factorization assumption:

\[ \vect(F(\bfx)) \sim \GP(\vect(\bfM_0(.)), \bfB_0(.,.) \otimes \bfA) \]

Matrix variate Gaussian Process


\begin{equation}
\vect(F(\bfx)) \sim \mathcal{GP}(\vect(\bfM_0(\bfx)), \bfB_0(\bfx,\bfx') \otimes \bfA)
\end{equation}

Given data \(\StDat_{1:k}\), \(\StDtDat_{1:k}\), and \( \underline{\boldsymbol{\mathcal{U}}}_{1:k} \):

\begin{equation*}
\newcommand{\ubcalU}{\underline{\boldsymbol{\calU}}}
\newcommand{\bcalM}{\boldsymbol{\calM}}
\newcommand{\bcalB}{\boldsymbol{\calB}}
\newcommand{\bcalC}{\boldsymbol{\calC}}
\begin{aligned}
&\bfM_k(\bfx) \triangleq \bfM_0(\bfx) + \left( \dot{\bfX}_{1:k} - \bcalM_{1:k}\ubcalU_{1:k}\right) \left(\ubcalU_{1:k}\bcalB_{1:k}(\bfx)\right)^\dagger \\
&\bfB_k(\bfx,\bfx') \triangleq \bfB_0(\bfx,\bfx') - \bcalB_{1:k}(\bfx)\ubcalU_{1:k} \left(\ubcalU_{1:k}\bcalB_{1:k}(\bfx')\right)^\dagger \\
&\left(\ubcalU_{1:k}\bcalB_{1:k}(\bfx)\right)^\dagger \triangleq \left(\ubcalU_{1:k}^\top\bcalB_{1:k}^{1:k}\ubcalU_{1:k} + \sigma^2 \bfI_k\right)^{-1}\ubcalU_{1:k}^\top\bcalB_{1:k}^\top(\bfx).
\label{eq:mvg-posterior}
\end{aligned}
\end{equation*}

Inference on MVGP:

\begin{align}
\vect(F_k(\bfx_*)) &\sim \mathcal{GP}(\vect(\bfM_k(\bfx_*)), \; \bfB_k(\bfx_*,\bfx_*') \otimes \bfA), \\
F_k(\bfx_*)\underline{\bfu}_* &\sim \mathcal{GP}(\bfM_k(\bfx_*)\underline{\bfu}_*, \; \underline{\bfu}_*^\top\bfB_k(\bfx_*,\bfx_*')\underline{\bfu}_*\otimes\bfA).
\end{align}

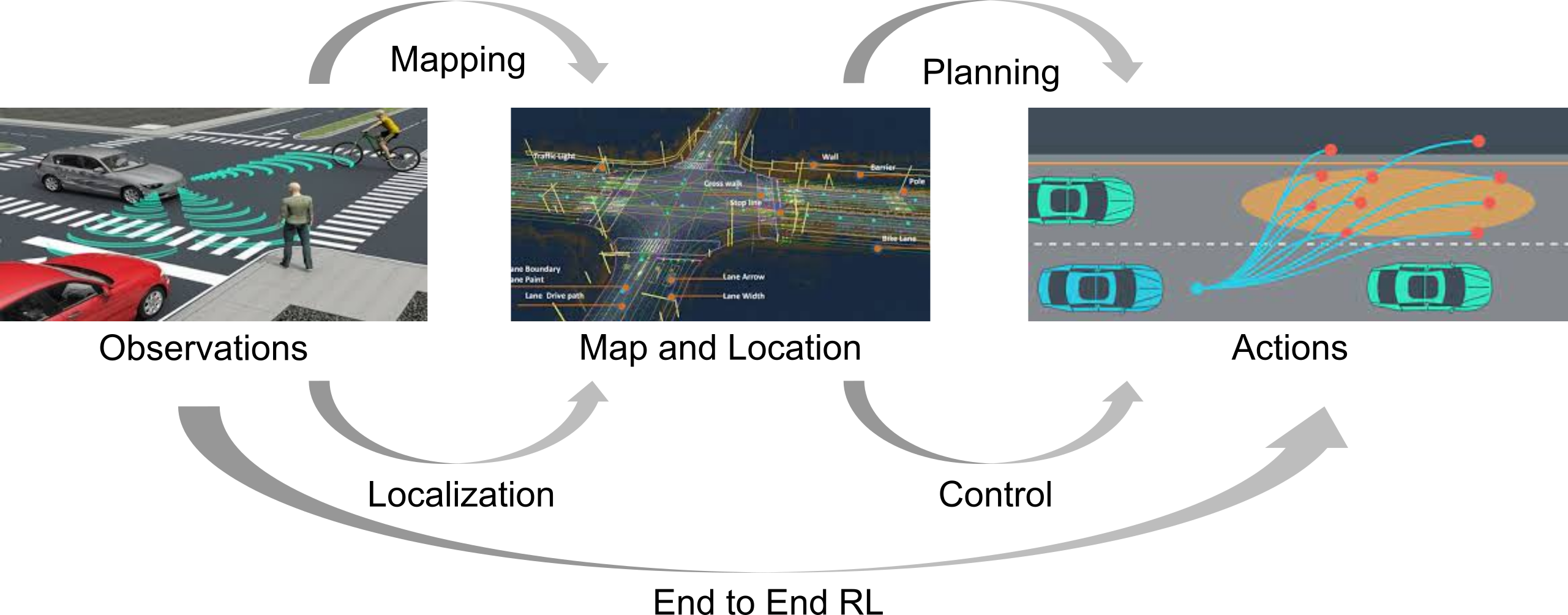

Learning Experiments

- \begin{align} \begin{bmatrix} \dot{\theta} \\ \dot{\omega} \end{bmatrix} = \underbrace{\begin{bmatrix} \omega \\ -\frac{g}{l} \sin(\theta) \end{bmatrix}}_{f(\bfx)} + \underbrace{\begin{bmatrix} 0 \\ \frac{1}{ml} \end{bmatrix}}_{g(\bfx)} u \end{align}
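The pendulum is control-affine, with \(F(\bfx) = [f(\bfx), g(\bfx)]\). Here is a minimal sketch of how training triples \((\bfx(t_i), \bfu(t_i), \dot{\bfx}(t_i))\) for Problem 1 could be generated from it. It assumes hypothetical values \(g = 9.81\), \(l = 1\), \(m = 1\), a uniformly random excitation input, and explicit Euler integration; none of these settings come from the talk.

```python
import math
import random
from typing import List, Tuple

# Hypothetical physical parameters (not specified in the talk).
GRAVITY, LENGTH, MASS = 9.81, 1.0, 1.0


def f(x: List[float]) -> List[float]:
    """Drift term f(x) = [omega, -(g/l) sin(theta)]."""
    theta, omega = x
    return [omega, -GRAVITY / LENGTH * math.sin(theta)]


def g(x: List[float]) -> List[float]:
    """Input column g(x) = [0, 1/(m l)] for the single torque input."""
    return [0.0, 1.0 / (MASS * LENGTH)]


def xdot(x: List[float], u: float) -> List[float]:
    """Control-affine dynamics x_dot = f(x) + g(x) u."""
    return [fi + gi * u for fi, gi in zip(f(x), g(x))]


def collect_data(x0: List[float], steps: int = 200, dt: float = 0.01,
                 seed: int = 0) -> List[Tuple[tuple, float, tuple]]:
    """Roll out Euler steps under random inputs; return (x, u, x_dot) triples."""
    rng = random.Random(seed)
    x, data = list(x0), []
    for _ in range(steps):
        u = rng.uniform(-1.0, 1.0)  # hypothetical excitation input
        dx = xdot(x, u)
        data.append((tuple(x), u, tuple(dx)))
        x = [xi + dt * dxi for xi, dxi in zip(x, dx)]
    return data


data = collect_data([0.1, 0.0])
print(len(data))  # 200
```

In the learning experiments, the learner would see only such triples and must recover \(f\) and \(g\) jointly through \(F(\bfx)\).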


Approach

- Estimate \(F(\bfx)\) with Matrix-Variate Gaussian Process

- Propagate uncertainty to the Safety condition

- Extension to continuous time using Lipschitz continuity assumptions.

- Extension to higher relative degree systems.

Uncertainty propagation to CBC

- \[ \mbox{CBC}(\bfx, \bfu)= [\grad_\bfx h(\bfx)]^\top F_k(\bfx)\ctrlaff + \alpha(h(\bfx)) \]

- Recall: \begin{equation} F_k(\bfx_*)\underline{\bfu}_* \sim \mathcal{GP}(\bfM_k(\bfx_*)\underline{\bfu}_*, \underline{\bfu}_*^\top\bfB_k(\bfx_*,\bfx_*')\underline{\bfu}_*\otimes\bfA). \end{equation}

- Lemma: \[ \mbox{CBC}(\bfx, \bfu) \sim \GP(\E[\mbox{CBC}], \Var(\mbox{CBC})) \] with \begin{align} \label{eq:parametofpi5543} \E[\mbox{CBC}_k](\bfx, \bfu) &= \nabla_\bfx h(\bfx)^\top \bfM_k(\bfx)\underline{\bfu} + \alpha(h(\bfx)),\\ \Var[\mbox{CBC}_k](\bfx, \bfx'; \bfu) &= \underline{\bfu}^\top\bfB_k(\bfx,\bfx')\underline{\bfu} \nabla_\bfx h(\bfx)^{\top}\bfA\nabla_\bfx h(\bfx') \end{align}

  Note: the mean is affine and the variance is quadratic in \( \bfu \).
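The Lemma can be evaluated directly from the posterior quantities. A minimal sketch at a single state (\(\bfx' = \bfx\)), assuming \(\underline{\bfu} = [1; \bfu]\) so that \(F(\bfx)\underline{\bfu} = f(\bfx) + g(\bfx)\bfu\) (the talk does not spell out this macro), with hypothetical numbers for \(n = 2\) states and \(m = 1\) input; `cbc_stats` is an illustrative name.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def matvec(M: Matrix, v: Sequence[float]) -> List[float]:
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in M]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(ai * bi for ai, bi in zip(a, b))


def cbc_stats(grad_h, alpha_h, M_k, B_k, A, u) -> Tuple[float, float]:
    """Mean and variance of CBC_k(x, u) at one state x (the Lemma with x' = x).

    grad_h : gradient of h at x (length n)
    alpha_h: alpha(h(x)), a deterministic scalar
    M_k    : posterior mean M_k(x), n x (1+m)
    B_k    : posterior column covariance B_k(x, x), (1+m) x (1+m)
    A      : row covariance, n x n
    u      : control input (length m)
    """
    u_bar = [1.0] + list(u)  # assumed: underline{u} = [1; u]
    mean = dot(grad_h, matvec(M_k, u_bar)) + alpha_h                        # affine in u
    var = dot(u_bar, matvec(B_k, u_bar)) * dot(grad_h, matvec(A, grad_h))  # quadratic in u
    return mean, var


# Hypothetical posterior quantities.
grad_h, alpha_h = [1.0, 0.0], 0.5
M_k = [[0.0, 1.0], [0.0, 0.0]]
B_k = [[1.0, 0.0], [0.0, 2.0]]
A = [[0.5, 0.0], [0.0, 1.0]]
for u in (0.0, 1.0, 2.0):
    print(u, cbc_stats(grad_h, alpha_h, M_k, B_k, A, [u]))
```

Here the mean grows linearly (0.5, 1.5, 2.5) while the variance grows quadratically (\(0.5(1 + 2u^2)\)), which is the structure the SOCP exploits.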

Deterministic condition for controller

- \begin{align} \min_{\bfu_k \in \mathcal{U}}& \text{ Cost function } \\ \qquad\text{s.t.}&~~\bbP\bigl( \text{ Safety constraint } \mid \bfx_k,\bfu_k \bigr) \ge 1-\epsilon, \end{align}

- \begin{align} \min_{\bfu_k \in \mathcal{U}}& \text{ Quadratic cost function } \\ \qquad\text{s.t.}&~~\bbP\bigl( \style{color:red}{\mbox{CBC}(\bfx_k, \bfu_k) > \zeta > 0} \mid \bfx_k,\bfu_k \bigr) \ge 1-\epsilon, \end{align}

- Safe controller (an SOCP): \begin{align} \min_{\bfu_k \in \mathcal{U}}& \text{ Quadratic cost function } \\ \qquad\text{s.t.}\qquad& \cssId{highlight-current-red-1}{\class{fragment}{ \E[\CBC] - \zeta \ge \sqrt{2\Var(\CBC)(\erf^{-1}(2\epsilon-1))^2} }} \end{align}
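Because the Lemma makes \(\mbox{CBC}\) Gaussian at fixed \((\bfx_k, \bfu_k)\), the chance constraint reduces to a mean-minus-scaled-standard-deviation test, and \(\sqrt{2}\,|\erf^{-1}(2\epsilon-1)| = \Phi^{-1}(1-\epsilon)\) for \(\epsilon < 1/2\). A minimal sketch using Python's `statistics.NormalDist` checks the deterministic form against the exact Gaussian probability; the function names and test values are hypothetical.

```python
import math
from statistics import NormalDist


def socp_constraint_holds(mean: float, var: float, zeta: float, eps: float) -> bool:
    """Deterministic form: E[CBC] - zeta >= sqrt(2 Var) |erfinv(2 eps - 1)|, eps in (0, 1/2)."""
    # sqrt(2) * |erfinv(2 eps - 1)| equals the standard normal quantile Phi^{-1}(1 - eps).
    z = NormalDist().inv_cdf(1.0 - eps)
    return mean - zeta >= z * math.sqrt(var)


def chance_constraint_holds(mean: float, var: float, zeta: float, eps: float) -> bool:
    """Exact Gaussian check: P(CBC > zeta) >= 1 - eps."""
    prob = 1.0 - NormalDist(mean, math.sqrt(var)).cdf(zeta)
    return prob >= 1.0 - eps


for mean in (0.5, 1.5):
    print(mean,
          socp_constraint_holds(mean, 0.25, 0.1, 0.05),
          chance_constraint_holds(mean, 0.25, 0.1, 0.05))
```

Both checks agree away from the boundary; the deterministic form is what enters the optimizer as a second-order cone constraint.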

Approach

- Estimate \(F(\bfx)\) with Matrix-Variate Gaussian Process

- Propagate uncertainty to the Control Barrier condition.

- Extension to continuous time using Lipschitz continuity assumptions.

- Extension to higher relative degree systems.

Safety beyond triggering times

- So far: \begin{align} \min_{\bfu_k \in \mathcal{U}}& \|\bfR(\bfx) (\bfu_k - \pi_\epsilon(\bfx_k)) \|_2^2 \\ \qquad\text{s.t.}&~~ \bbP\bigl( \mbox{CBC}(\style{color:red}{\bfx_k}, \bfu_k) > \style{color:red}{\zeta} \mid \bfx_k,\bfu_k \bigr) \ge \style{color:red}{1-\epsilon}, \end{align}

- Next: \begin{align} \min_{\bfu_k \in \mathcal{U}}& \|\bfR(\bfx) (\bfu_k - \pi_\epsilon(\bfx_k)) \|_2^2 \\ \qquad\text{s.t.}&~~ \bbP\bigl( \mbox{CBC}(\style{color:red}{\bfx(t)}, \bfu_k) > \style{color:red}{0} \mid \bfx_k,\bfu_k \bigr) \ge \style{color:red}{p_k}, \qquad \style{color:red}{\forall t \in [t_k, t_k + \tau_k)} \end{align}

Safety beyond triggering times

- Assume Lipschitz continuity of dynamics: \begin{align} \textstyle \label{eq:smoth23} \bbP\left( \sup_{s \in [0, \tau_k)}\|F(\bfx(t_k+s))\ctrlaff_k -F(\bfx(t_k))\ctrlaff_k\| \le L_k \|\bfx(t_k+s)-\bfx_k\| \right) \ge q_k:=1-e^{-b_kL_k}. \end{align}

- Assume Lipschitz continuity of \( \alpha(h(\bfx)) \): \begin{align} \label{htym6!7uytf} |\alpha \circ h(\bfx(t_k+s))-\alpha \circ h(\bfx_k)| \le L_{\alpha \circ h} \|\bfx(t_k+s)-\bfx_k\|. \end{align}

- Assume a bounded barrier gradient: \[ \sup_{s \in [0, \tau_k)} \| \grad_\bfx h(\bfx(t_k + s)) \| \le \chi_k \]

Theorem:

\[ \bbP\bigl( \mbox{CBC}(\bfx_k, \bfu_k) > \zeta \mid \bfx_k,\bfu_k \bigr) \ge 1-\epsilon \quad\Rightarrow\quad \bbP\bigl( \mbox{CBC}(\bfx(t), \bfu_k) > 0 \mid \bfx_k,\bfu_k \bigr) \ge p_k, \; \forall t \in [t_k, t_k + \tau_k) \]

holds with \( p_k = (1-\epsilon) q_k \) and \( \tau_k \le \frac{1}{L_k}\ln\left(1+\frac{L_k\zeta}{(\chi_kL_k+L_{\alpha \circ h})\|\dot{\bfx}_k\|}\right) \)
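The bound on \(\tau_k\) is cheap to evaluate online. A minimal sketch, with hypothetical constants and illustrative function names, computes it together with \(q_k = 1 - e^{-b_k L_k}\) (assuming \(L_k > 0\)). With \(L_{\alpha \circ h} = 0\) the bound simplifies to \(\frac{1}{L_k}\ln\bigl(1 + \zeta/(\chi_k\|\dot{\bfx}_k\|)\bigr)\), so the example also shows the trade-off that a larger \(L_k\) raises \(q_k\) but shortens the certified interval.

```python
import math


def max_trigger_interval(L_k: float, zeta: float, chi_k: float,
                         L_alpha_h: float, xdot_norm: float) -> float:
    """Upper bound on tau_k from the theorem (inf when the state is at rest)."""
    if xdot_norm == 0.0:
        return math.inf
    ratio = L_k * zeta / ((chi_k * L_k + L_alpha_h) * xdot_norm)
    return math.log1p(ratio) / L_k  # (1/L_k) ln(1 + ratio)


def lipschitz_confidence(b_k: float, L_k: float) -> float:
    """q_k = 1 - exp(-b_k L_k): probability that the Lipschitz bound holds."""
    return 1.0 - math.exp(-b_k * L_k)


# Hypothetical constants: zeta = 1, chi_k = 1, L_{alpha o h} = 0, ||x_dot_k|| = 1, b_k = 1.
for L_k in (1.0, 2.0):
    print(L_k, max_trigger_interval(L_k, 1.0, 1.0, 0.0, 1.0), lipschitz_confidence(1.0, L_k))
```

A larger margin \(\zeta\) at the triggering time likewise buys a longer interval before the next control update is needed.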

Approach

- Estimate \(F(\bfx)\) with Matrix-Variate Gaussian Process

- Propagate uncertainty to the Control Barrier condition.

- Extension to continuous time using Lipschitz continuity assumptions.

- Extension to higher relative degree systems.

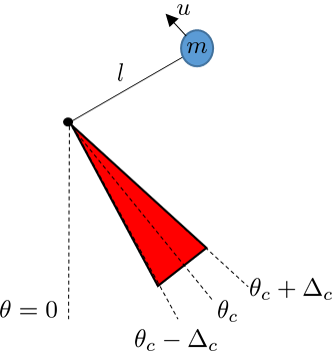

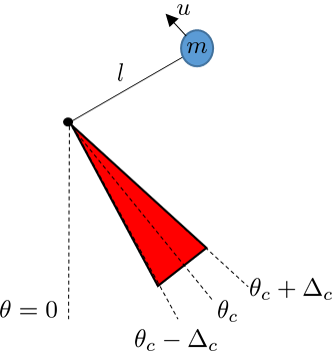

Higher relative degree CBFs

- \begin{align} \begin{bmatrix} \dot{\theta} \\ \dot{\omega} \end{bmatrix} = \underbrace{\begin{bmatrix} \omega \\ -\frac{g}{l} \sin(\theta) \end{bmatrix}}_{f(\bfx)} + \underbrace{\begin{bmatrix} 0 \\ \frac{1}{ml} \end{bmatrix}}_{g(\bfx)} u \end{align}

- \begin{align} h\left(\begin{bmatrix} \theta \\ \omega \end{bmatrix} \right) = \cos(\Delta_{col}) - \cos(\theta - \theta_c) \end{align}

- Note that \( \underbrace{[\grad_\bfx h(\bfx)]^\top g(\bfx)}_{\Lie_g h(\bfx)} = 0 \), so

\( \CBC(\bfx, \bfu) = \underbrace{[\grad_\bfx h(\bfx)]^\top f(\bfx)}_{\Lie_f h(\bfx)} + \underbrace{[\grad_\bfx h(\bfx)]^\top g(\bfx)}_{\Lie_g h(\bfx)} \bfu + \alpha(h(\bfx)) \)

is independent of \(\bfu\).
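The relative-degree issue can be checked numerically. A minimal sketch, assuming hypothetical values \(g = 9.81\), \(l = m = 1\), \(\theta_c = 0\), \(\Delta_{col} = 0.3\), computes Lie derivatives by central finite differences along each vector field. \(\Lie_g h\) vanishes, while \(\Lie_g \Lie_f h = \sin(\theta - \theta_c)/(ml)\) does not, so \(h\) has relative degree 2 wherever \(\sin(\theta - \theta_c) \ne 0\). The helper `lie` is an illustrative name.

```python
import math
from typing import Callable, List

Vector = List[float]

# Hypothetical parameters (not specified in the talk).
GRAVITY, LENGTH, MASS = 9.81, 1.0, 1.0
THETA_C, DELTA_COL = 0.0, 0.3


def h(x: Vector) -> float:
    theta, _ = x
    return math.cos(DELTA_COL) - math.cos(theta - THETA_C)


def f(x: Vector) -> Vector:
    theta, omega = x
    return [omega, -GRAVITY / LENGTH * math.sin(theta)]


def g(x: Vector) -> Vector:
    return [0.0, 1.0 / (MASS * LENGTH)]


def lie(phi: Callable[[Vector], float], field: Callable[[Vector], Vector],
        x: Vector, eps: float = 1e-4) -> float:
    """Lie derivative [grad phi(x)]^T field(x) via a central difference along field(x)."""
    v = field(x)
    x_plus = [xi + eps * vi for xi, vi in zip(x, v)]
    x_minus = [xi - eps * vi for xi, vi in zip(x, v)]
    return (phi(x_plus) - phi(x_minus)) / (2.0 * eps)


def Lf_h(x: Vector) -> float:
    return lie(h, f, x)


x = [0.2, 0.5]
print(lie(h, g, x))     # 0.0: h does not depend on omega, and g only moves omega
print(lie(Lf_h, g, x))  # approximately sin(0.2) / (m l)
```

Since \(\Lie_g h \equiv 0\), the input first appears after differentiating twice, which motivates the exponential CBF.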

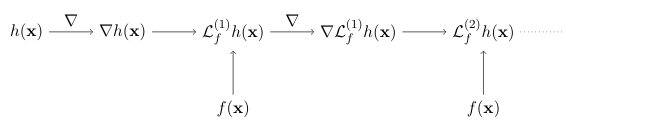

Exponential Control Barrier Functions (ECBF)

- \[ \CBCr(\bfx, \bfu) := \Lie_f^{(r)} h(\bfx) + \cssId{highlight-current-red-1}{\class{fragment}{ \underbrace{ \Lie_g \Lie_f^{(r-1)} h(\bfx) }_{\ne 0} }} \bfu + \bfk_\alpha^\top \begin{bmatrix} h(\bfx) \\ \Lie_f h(\bfx) \\ \vdots \\ \Lie_f^{(r-1)} h(\bfx) \end{bmatrix} \]
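For the pendulum barrier, \(r = 2\) and every term of the ECBF condition has a closed form: \(\Lie_f h = \sin(\theta-\theta_c)\,\omega\), \(\Lie_f^{(2)} h = \cos(\theta-\theta_c)\,\omega^2 - \frac{g}{l}\sin(\theta-\theta_c)\sin\theta\), and \(\Lie_g \Lie_f h = \sin(\theta-\theta_c)/(ml)\). A minimal sketch evaluating \(\CBCtwo\) with the known (not learned) dynamics, assuming hypothetical parameters, \(\Delta_{col} = 0.5\), and gains \(\bfk_\alpha = [1, 2]\):

```python
import math
from typing import Sequence, Tuple

# Hypothetical parameters and ECBF gains (not specified in the talk).
GRAVITY, LENGTH, MASS = 9.81, 1.0, 1.0
THETA_C, DELTA_COL = 0.0, 0.5
K_ALPHA = (1.0, 2.0)  # k_alpha = [k_1, k_2]


def ecbf_terms(theta: float, omega: float) -> Tuple[float, float, float, float]:
    """Return h, L_f h, L_f^2 h, L_g L_f h for the pendulum barrier."""
    d = theta - THETA_C
    h = math.cos(DELTA_COL) - math.cos(d)
    lf_h = math.sin(d) * omega
    lf2_h = math.cos(d) * omega ** 2 - GRAVITY / LENGTH * math.sin(d) * math.sin(theta)
    lg_lf_h = math.sin(d) / (MASS * LENGTH)
    return h, lf_h, lf2_h, lg_lf_h


def cbc2(theta: float, omega: float, u: float, k: Sequence[float] = K_ALPHA) -> float:
    """CBC^(2)(x, u) = L_f^2 h + L_g L_f h u + k_1 h + k_2 L_f h."""
    h, lf_h, lf2_h, lg_lf_h = ecbf_terms(theta, omega)
    return lf2_h + lg_lf_h * u + k[0] * h + k[1] * lf_h


def min_safe_input(theta: float, omega: float, k: Sequence[float] = K_ALPHA) -> float:
    """Smallest u with CBC^(2) >= 0; valid when L_g L_f h > 0."""
    h, lf_h, lf2_h, lg_lf_h = ecbf_terms(theta, omega)
    return -(lf2_h + k[0] * h + k[1] * lf_h) / lg_lf_h


u_min = min_safe_input(1.0, 0.0)
print(u_min, cbc2(1.0, 0.0, u_min))  # CBC^(2) is numerically zero at this boundary input
```

Because \(\Lie_g \Lie_f h \ne 0\) here, the input now enters \(\CBCtwo\) affinely, so a safe \(u\) can be selected.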

Propagating uncertainty to \( \CBCtwo \)

- \[ \CBCtwo(\bfx, \bfu) = [\grad_\bfx \Lie_f h(\bfx)]^\top F(\bfx)\ctrlaff + \bfk_\alpha^\top \begin{bmatrix} h(\bfx) & \Lie_f h(\bfx) \end{bmatrix}^\top \]

- \( \Lie_f h(\bfx) = [\grad_\bfx h(\bfx)]^\top f(\bfx) \) is a Gaussian process

- \( \grad_\bfx \Lie_f h(\bfx) \) is a Gaussian process:

  If \( p(\bfx) \sim \GP(\mu(\bfx), \kappa(\bfx, \bfx'))\), then \( \grad_\bfx p(\bfx) \sim \GP(\grad_\bfx \mu(\bfx), \grad_\bfx \grad_{\bfx'}^\top \kappa(\bfx, \bfx')) \)
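For a concrete instance of the derivative rule, take a scalar input and the squared-exponential kernel \(\kappa(x, x') = s^2 \exp\bigl(-(x-x')^2 / (2\ell^2)\bigr)\). Its mixed derivative is \(\partial^2\kappa/\partial x\,\partial x' = \kappa(x,x')\,\bigl(1/\ell^2 - (x-x')^2/\ell^4\bigr)\), so the derivative's prior variance at any point is \(s^2/\ell^2\). A minimal sketch, with hypothetical \(s^2\) and \(\ell\), checks this closed form against a central finite difference.

```python
import math

# Hypothetical kernel hyperparameters.
S2, ELL = 1.3, 0.7


def rbf(x: float, xp: float) -> float:
    """kappa(x, x') = s^2 exp(-(x - x')^2 / (2 l^2))."""
    return S2 * math.exp(-0.5 * (x - xp) ** 2 / ELL ** 2)


def deriv_cov(x: float, xp: float) -> float:
    """Cov(p'(x), p'(x')) = d^2 kappa / dx dx' for the squared-exponential kernel."""
    r = x - xp
    return rbf(x, xp) * (1.0 / ELL ** 2 - r ** 2 / ELL ** 4)


def deriv_cov_fd(x: float, xp: float, eps: float = 1e-4) -> float:
    """Mixed second derivative of kappa by a central finite difference."""
    return (rbf(x + eps, xp + eps) - rbf(x + eps, xp - eps)
            - rbf(x - eps, xp + eps) + rbf(x - eps, xp - eps)) / (4.0 * eps ** 2)


for x, xp in [(0.0, 0.0), (0.0, 0.5), (0.3, 1.2)]:
    print(x, xp, deriv_cov(x, xp), deriv_cov_fd(x, xp))
```

Note the covariance turns negative once \(|x - x'| > \ell\): slopes at well-separated points tend to have opposite signs under this prior.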

Propagating uncertainty to \( \CBCtwo \)

- \[ \CBCtwo(\bfx, \bfu) = [\grad_\bfx \Lie_f h(\bfx)]^\top F(\bfx)\ctrlaff + \bfk_\alpha^\top \begin{bmatrix} h(\bfx) & \Lie_f h(\bfx) \end{bmatrix}^\top \]

- \( \Lie_f h(\bfx) = [\grad_\bfx h(\bfx)]^\top f(\bfx) \) is a Gaussian process

- \( \grad_\bfx \Lie_f h(\bfx) \) is a Gaussian process

- \( [\grad_\bfx \Lie_f h(\bfx)]^\top F(\bfx)\ctrlaff \) is a quadratic form of GPs (not a GP)

- \( \CBCtwo(\bfx, \bfu) \) is a quadratic form of GPs, yet

  \( \E[\CBCtwo](\bfx, \bfu) \) is still affine in \( \bfu \).

  \( \Var[\CBCtwo](\bfx, \bfx'; \bfu) \) is still quadratic in \( \bfu \).

Extending to \(\CBCr\)

- \[ \CBCr(\bfx, \bfu) = [\grad_\bfx \Lie_f^{(r-1)} h(\bfx)]^\top F(\bfx)\ctrlaff + \bfk_\alpha^\top \begin{bmatrix} h(\bfx) & \Lie_f h(\bfx) & \dots & \Lie_f^{(r-1)} h(\bfx) \end{bmatrix}^\top \]

- \( \CBCr(\bfx, \bfu) \) is not a GP, but

  \( \E[\CBCr](\bfx, \bfu) \) is still affine in \( \bfu \).

  \( \Var[\CBCr](\bfx, \bfx'; \bfu) \) is still quadratic in \( \bfu \).

- For \( r \ge 3 \), the \(\CBCr\) statistics can be estimated by Monte Carlo methods.
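The Monte Carlo route can be sketched as: draw samples of the random factors, evaluate the quadratic form, and take the sample mean and variance. To keep the example checkable, it assumes a simplified product \(\bfa^\top \bfb\) of two independent Gaussian vectors with diagonal covariances and hypothetical moments, for which the exact moments are known. In \(\CBCr\) the factors are correlated; the same estimator would then sample from their joint posterior instead.

```python
import random
from typing import List, Tuple


def mc_quadratic_form(mu_a: List[float], sd_a: List[float],
                      mu_b: List[float], sd_b: List[float],
                      n_samples: int = 50_000, seed: int = 0) -> Tuple[float, float]:
    """Monte Carlo mean and variance of z = a^T b with independent Gaussian entries."""
    rng = random.Random(seed)
    zs = []
    for _ in range(n_samples):
        a = [rng.gauss(m, s) for m, s in zip(mu_a, sd_a)]
        b = [rng.gauss(m, s) for m, s in zip(mu_b, sd_b)]
        zs.append(sum(ai * bi for ai, bi in zip(a, b)))
    mean = sum(zs) / n_samples
    var = sum((z - mean) ** 2 for z in zs) / (n_samples - 1)  # unbiased sample variance
    return mean, var


def exact_quadratic_form(mu_a, sd_a, mu_b, sd_b) -> Tuple[float, float]:
    """Closed form for independent entries: E = mu_a^T mu_b, Var = sum of per-term variances."""
    mean = sum(ma * mb for ma, mb in zip(mu_a, mu_b))
    var = sum(ma ** 2 * sb ** 2 + mb ** 2 * sa ** 2 + sa ** 2 * sb ** 2
              for ma, sa, mb, sb in zip(mu_a, sd_a, mu_b, sd_b))
    return mean, var


# Hypothetical moments.
mu_a, sd_a = [1.0, 0.5], [0.1, 0.2]
mu_b, sd_b = [2.0, -1.0], [0.1, 0.1]
print(mc_quadratic_form(mu_a, sd_a, mu_b, sd_b))
print(exact_quadratic_form(mu_a, sd_a, mu_b, sd_b))  # approximately (1.5, 0.093)
```

The estimator's error shrinks as \(1/\sqrt{N}\), which is why a closed form is preferred when it exists (\(r \le 2\)).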

Safe controller using ECBF

- \begin{align} \min_{\bfu_k \in \mathcal{U}}& \|\bfR(\bfx) (\bfu_k - \pi_\epsilon(\bfx_k)) \|_2^2 \\ \qquad\text{s.t.}&~~ \bbP\bigl( \CBCr(\bfx_k, \bfu_k) > \zeta \mid \bfx_k,\bfu_k \bigr) \ge 1-\epsilon \end{align}

- Using Cantelli's (Chebyshev's one-sided) inequality, which needs only the mean and variance of \( \CBCr \)

- Safe controller (an SOCP) \begin{align} \min_{\bfu_k \in \mathcal{U}}& \|\bfR(\bfx) (\bfu_k - \pi_\epsilon(\bfx_k)) \|_2^2 \\ \qquad\text{s.t.}\qquad &\E[\mbox{CBC}_k^{(r)}]-\zeta \ge \sqrt{\frac{1-\epsilon}{\epsilon}\Var[\mbox{CBC}_k^{(r)}]} \end{align}
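For a single control input, the feasible set of the Cantelli constraint is an interval: the margin \(\E - \zeta - c\sqrt{\Var}\) is concave in \(u\) because \(\sqrt{\Var}\) is a norm of an affine function of \(u\). A minimal sketch of the SOCP without a conic solver, assuming \(\bfR = 1\), \(\E[\CBCr] = e_0 + e_1 u\), \(\Var[\CBCr] = [1, u]\,\bfS\,[1, u]^\top\) with a hypothetical positive semidefinite \(\bfS\), and input bounds \(\mathcal{U} = [-10, 10]\). A ternary search finds the input with the largest margin, then bisection finds the feasible input closest to \(\pi_\epsilon(\bfx_k)\). The function name is illustrative; a vector input would call for a conic solver.

```python
import math
from typing import List, Optional


def safe_scalar_control(u_ref: float, e0: float, e1: float, S: List[List[float]],
                        zeta: float, eps: float, u_lo: float = -10.0,
                        u_hi: float = 10.0, iters: int = 200) -> Optional[float]:
    """argmin (u - u_ref)^2 s.t. E - zeta >= sqrt((1-eps)/eps * Var), u in [u_lo, u_hi].

    E(u) = e0 + e1 u and Var(u) = [1, u] S [1, u]^T with S positive semidefinite.
    Returns None when no input in [u_lo, u_hi] satisfies the constraint.
    """
    c = math.sqrt((1.0 - eps) / eps)  # Cantelli factor

    def margin(u: float) -> float:
        # Concave in u: affine mean minus a norm of [1, u].
        var = S[0][0] + 2.0 * S[0][1] * u + S[1][1] * u * u
        return e0 + e1 * u - zeta - c * math.sqrt(max(var, 0.0))

    u_ref = min(max(u_ref, u_lo), u_hi)  # project the reference onto the input bounds
    if margin(u_ref) >= 0.0:
        return u_ref

    # Ternary search for the input with the largest margin (margin is concave).
    a, b = u_lo, u_hi
    for _ in range(iters):
        m1, m2 = a + (b - a) / 3.0, b - (b - a) / 3.0
        if margin(m1) < margin(m2):
            a = m1
        else:
            b = m2
    u_best = 0.5 * (a + b)
    if margin(u_best) < 0.0:
        return None

    # Bisection between the infeasible reference and the feasible u_best.
    bad, good = u_ref, u_best
    for _ in range(iters):
        mid = 0.5 * (bad + good)
        if margin(mid) >= 0.0:
            good = mid
        else:
            bad = mid
    return good


# Hypothetical statistics: E = u, Var = 0.01, zeta = 0.1, eps = 0.1, so we need u >= 0.4.
S = [[0.01, 0.0], [0.0, 0.0]]
print(safe_scalar_control(0.0, 0.0, 1.0, S, zeta=0.1, eps=0.1))  # approximately 0.4
print(safe_scalar_control(1.0, 0.0, 1.0, S, zeta=0.1, eps=0.1))  # 1.0: reference already safe
```

The controller leaves \(\pi_\epsilon(\bfx_k)\) untouched when it is already safe and otherwise moves it minimally onto the constraint boundary, which is the minimally invasive behavior the quadratic cost encodes.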

Safe controller using ECBF: Experiments

- \begin{align} \begin{bmatrix} \dot{\theta} \\ \dot{\omega} \end{bmatrix} = \underbrace{\begin{bmatrix} \omega \\ -\frac{g}{l} \sin(\theta) \end{bmatrix}}_{f(\bfx)} + \underbrace{\begin{bmatrix} 0 \\ \frac{1}{ml} \end{bmatrix}}_{g(\bfx)} u \end{align}

- \begin{align} h\left(\begin{bmatrix} \theta \\ \omega \end{bmatrix} \right) = \cos(\Delta_{col}) - \cos(\theta - \theta_c) \end{align}

Ackermann Drive Simulations

2022 upgrade: Learning \( h(\bfx) \)


Better math

Better Simulation: PyBullet

Results

Sample trajectories

Takeaways

- Bayesian learning enables uncertainty awareness

- An uncertainty-aware safe controller can be formulated as a second-order cone program (SOCP)

Future work

Other work

OrcVIO

Mutual Localization

Learning from Interventions

Continuous occlusion modeling

Visual Inertial Odometry

Mutual Localization

Learning from Interventions

Continuous occlusion modeling

Other work

OrcVIO

Mutual Localization

Semantic Inverse RL

Learning from Interventions

Future work

Collaborators

Questions?

OrcVIO

Mutual Localization

vikasdhiman.info

Inverse Reinforcement Learning

Learning from Interventions

- Shengyang Sun, Changyou Chen, and Lawrence Carin. Learning Structured Weight Uncertainty in Bayesian Neural Networks. In International Conference on Artificial Intelligence and Statistics (AISTATS), pages 1283–1292, 2017.

- A. D. Ames, S. Coogan, M. Egerstedt, G. Notomista, K. Sreenath, and P. Tabuada. Control barrier functions: Theory and applications. In 2019 18th European Control Conference (ECC), pages 3420–3431, June 2019. doi: 10.23919/ECC.2019.8796030.

- Mauricio A Alvarez, Lorenzo Rosasco, and Neil D Lawrence. Kernels for vector-valued functions: A review. Foundations and Trends in Machine Learning, 4(3):195–266, 2012.

- Niranjan Srinivas, Andreas Krause, Sham M Kakade, and Matthias Seeger. Gaussian process optimization in the bandit setting: No regret and experimental design. arXiv preprint arXiv:0912.3995, 2009.

- Quan Nguyen and Koushil Sreenath. Exponential control barrier functions for enforcing high relative-degree safety-critical constraints. In 2016 American Control Conference (ACC), pages 322–328. IEEE, 2016a.

- Louizos, Christos, and Max Welling. "Structured and efficient variational deep learning with matrix gaussian posteriors." International Conference on Machine Learning. 2016.

- Khojasteh, M. J., Dhiman, V., Franceschetti, M., & Atanasov, N. (2020). Probabilistic safety constraints for learned high relative degree system dynamics. L4DC 2020. available https://arXiv.org/abs/1912.10116.

- Learning from Interventions using Hierarchical Policies for Safe Learning J Bi, V Dhiman, T Xiao, C Xu - AAAI 2020. Available https://arXiv.org/abs/1912.02241

- Learning Navigation Costs from Demonstration in Partially Observable Environments T Wang, V Dhiman, N Atanasov. ICRA 2020. Available https://arXiv.org/abs/2002.11637

- Andrychowicz, Marcin, et al. "Hindsight experience replay." Advances in Neural Information Processing Systems. 2017.

- Mutual localization: Two camera relative 6-dof pose estimation from reciprocal fiducial observation. V Dhiman, J Ryde, JJ Corso. IROS 2013

- Learning Compositional Sparse Models of Bimodal Percepts. S Kumar, V Dhiman, JJ Corso AAAI, 2014

- Voxel planes: Rapid visualization and meshification of point cloud ensembles. J Ryde, V Dhiman, R Platt IROS, 2013

- Modern MAP inference methods for accurate and fast occupancy grid mapping on higher order factor graphs. V Dhiman, A Kundu, F Dellaert, JJ Corso ICRA 2014

- Continuous occlusion models for road scene understanding M Chandraker, V Dhiman. US Patent 9,821,813, 2017

- A continuous occlusion model for road scene understanding V Dhiman, QH Tran, JJ Corso, M Chandraker. CVPR 2016

- A Critical Investigation of DRL for Navigation V Dhiman, S Banerjee, B Griffin, JM Siskind, JJ Corso NeurIPS DRL Workshop, 2017.

- Learning Compositional Sparse Bimodal Models S Kumar, V Dhiman, PA Koch, JJ Corso. PAMI, 2017.

- (Mirowski et al. 2017) Learning to navigate in complex environments. In ICLR 2017.

- Matthias Plappert, Marcin Andrychowicz, Alex Ray, Bob McGrew, Bowen Baker, Glenn Powell, Jonas Schneider, Josh Tobin, Maciek Chociej, Peter Welinder, Vikash Kumar, and Wojciech Zaremba. Multi-Goal Reinforcement Learning: Challenging Robotics Environments and Request for Research. arXiv:1802.09464, 2018.

- Kaelbling, Leslie Pack. "Learning to achieve goals." IJCAI. 1993.

- V. Dhiman, S. Banerjee, J. M. Siskind, and J. J. Corso. Learning goal-conditioned value functions with one-step path rewards rather than goal-rewards. Submitted to ICLR, 2019 (under review).

- Zachariou, Peter et al. “SPEEDING Effects on hazard perception and reaction time.” (2011).

- Mnih, Volodymyr, et al. "Human-level control through deep reinforcement learning." Nature 518.7540 (2015): 529.

- Watkins, Christopher JCH, and Peter Dayan. "Q-learning." Machine learning 8.3-4 (1992): 279-292.

- Pearl, Judea. "Fusion, propagation, and structuring in belief networks." Artificial intelligence 29.3 (1986): 241-288.

- Jojic, Vladimir, Stephen Gould, and Daphne Koller. "Accelerated dual decomposition for MAP inference." ICML. 2010.

- Merali, Rehman S., and Timothy D. Barfoot. "Occupancy grid mapping with Markov chain monte carlo Gibbs sampling." Robotics and Automation (ICRA), 2013 IEEE International Conference on. IEEE, 2013.

- Shayle R Searle and Marvin HJ Gruber. Linear models. John Wiley & Sons, 1971

- Kehan Long, Vikas Dhiman, Melvin Leok, Jorge Cortés, Nikolay Atanasov: Safe Control Synthesis With Uncertain Dynamics and Constraints. IEEE Robotics Autom. Lett. 7(3): 7295-7302 (2022)

Bibliography
